
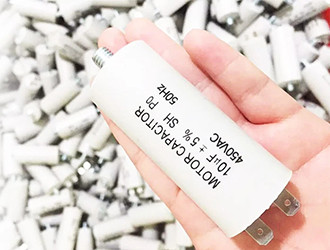

Dingfeng Capacitor: What Does the Voltage Rating on a Capacitor Mean?

Date: 2019/04/12


The voltage rating on a capacitor is the maximum voltage that can safely be applied across it, and therefore the highest voltage to which it can safely be charged.
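As a minimal sketch, the rule can be written as a simple check in Python. The function name `within_voltage_rating` and its optional `margin` parameter are hypothetical and for illustration only; the margin is an assumed derating fraction, not a figure from this article.

```python
def within_voltage_rating(applied_volts: float, rated_volts: float, margin: float = 0.0) -> bool:
    """Return True if the applied voltage stays at or below the (derated) rating.

    `margin` is a hypothetical derating fraction: 0.2 keeps the applied
    voltage at or below 80% of the rating; 0.0 means no derating.
    Only the magnitude of the voltage is checked; polarity is not.
    """
    if rated_volts <= 0:
        raise ValueError("rated_volts must be positive")
    if not 0.0 <= margin < 1.0:
        raise ValueError("margin must be in [0, 1)")
    # Highest voltage allowed across the capacitor after derating.
    limit = rated_volts * (1.0 - margin)
    return abs(applied_volts) <= limit


print(within_voltage_rating(12.0, 25.0))       # True
print(within_voltage_rating(30.0, 25.0))       # False: above the rating
print(within_voltage_rating(22.0, 25.0, 0.2))  # False: derated limit is 20 V
```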

Remember that capacitors are storage devices. The main thing to know about a capacitor is that it stores a certain amount of charge at a certain voltage: its capacitance (1 µF, 100 µF, 1000 µF, etc.) sets how much charge it holds per volt (stored charge Q = C × V), and its voltage rating (10 V, 25 V, 50 V, etc.) sets the highest voltage it can safely be charged to. So when choosing a capacitor, you need to know what capacitance you want and what voltage it will have to withstand.
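The relationship between capacitance, voltage, and stored charge is Q = C × V, and the stored energy is E = ½ × C × V². The sketch below computes both at a capacitor's rated voltage, the most it can safely hold; the 100 µF / 25 V part is a hypothetical example, and the helper names are illustrative only.

```python
def stored_charge(capacitance_farads: float, volts: float) -> float:
    """Charge in coulombs: Q = C * V."""
    return capacitance_farads * volts


def stored_energy(capacitance_farads: float, volts: float) -> float:
    """Energy in joules: E = 0.5 * C * V**2."""
    return 0.5 * capacitance_farads * volts ** 2


# Hypothetical example: a 100 µF capacitor rated at 25 V.
c = 100e-6
v_rated = 25.0
print(f"Charge at rated voltage: {stored_charge(c, v_rated) * 1e3:.2f} mC")  # 2.50 mC
print(f"Energy at rated voltage: {stored_energy(c, v_rated) * 1e3:.2f} mJ")  # 31.25 mJ
```

Because energy grows with the square of the voltage, operating the same capacitor at half its rated voltage stores only a quarter of the energy.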

E-mail/Skype: info..........com

Tel/WhatsApp: +86 15057271708

Wechat: 13857647932

Skype: Mojinxin124